Dataster Documentation

Dataster helps you build Generative AI applications with better accuracy and lower latency.

Automated Evaluation Tests Overview

When developing a GenAI application, the accuracy of the generated answers is critical. Users rely on the application for correct information, so accuracy directly affects their trust and satisfaction, and inaccurate answers risk spreading misinformation. Evaluating answer quality means testing against a diverse set of queries to confirm that the AI handles varied contexts and nuances. Because models, prompts, and data change over time, answer quality needs continuous monitoring and improvement to keep the application credible and effective.

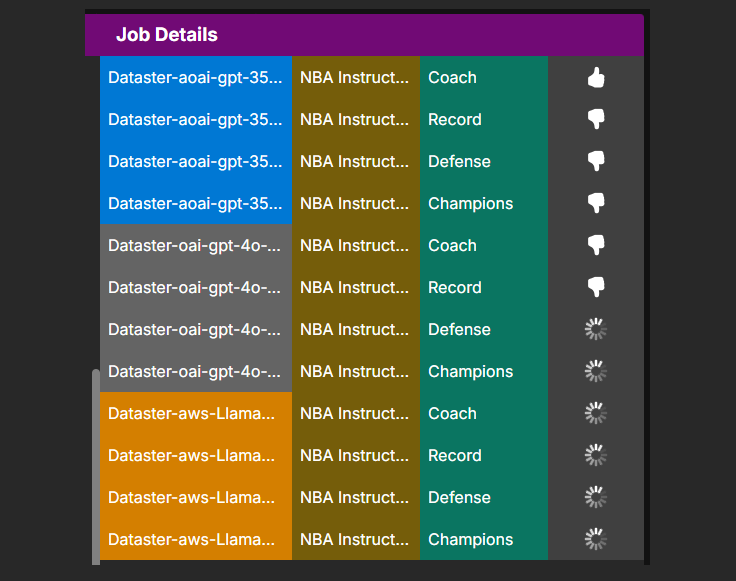

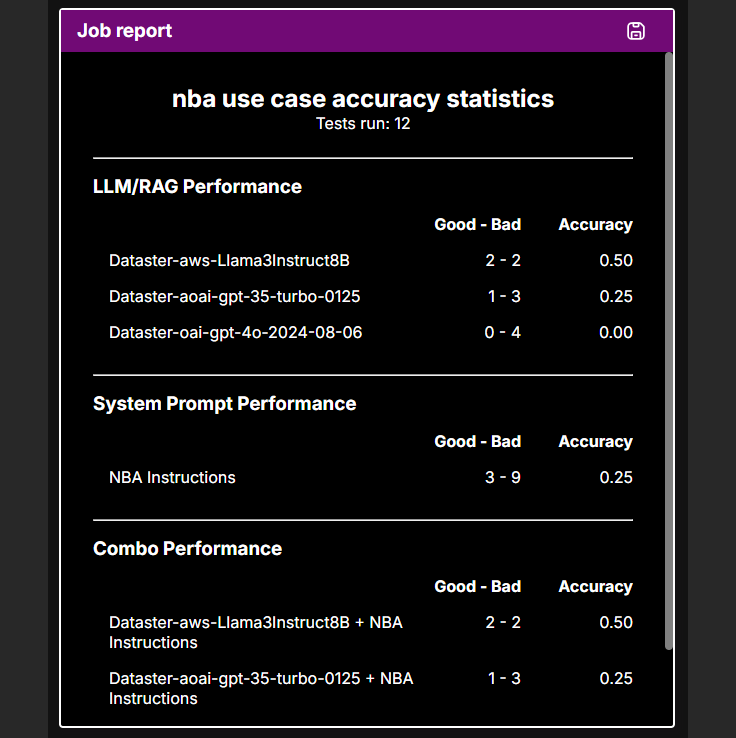

To address the challenge of ensuring high-quality outputs at scale, Dataster offers a comprehensive automated evaluation framework. This framework enables builders to evaluate their use case efficiently by generating and analyzing thousands of responses from various combinations of system prompts, Large Language Models (LLMs), and optionally vector stores for RAG. By doing so, builders can create numerous RAG combinations to determine which configuration consistently delivers the most accurate and reliable answers for their use case and users. This automated approach significantly enhances the speed and efficiency of testing, helping to fine-tune the application to meet the highest standards of answer quality.
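As an illustration of how quickly these combinations multiply, here is a minimal Python sketch that enumerates every pairing of system prompt, LLM, and optional vector store. All names in it (`build_configurations`, the prompt, model, and index labels) are hypothetical placeholders chosen for this example and are not Dataster identifiers or API calls.

```python
from itertools import product

# Hypothetical labels for illustration only; not Dataster identifiers.
system_prompts = ["concise-assistant", "detailed-assistant"]
llms = ["model-a", "model-b", "model-c"]
vector_stores = [None, "product-docs-index"]  # None means "no RAG"


def build_configurations(system_prompts, llms, vector_stores):
    """Return one configuration per (system prompt, LLM, vector store) combination."""
    return [
        {"system_prompt": sp, "llm": llm, "vector_store": vs}
        for sp, llm, vs in product(system_prompts, llms, vector_stores)
    ]


configurations = build_configurations(system_prompts, llms, vector_stores)

# Each user prompt is sent to every configuration, so the number of
# responses to evaluate is (number of configurations) x (number of prompts).
user_prompt_count = 100
total_responses = len(configurations) * user_prompt_count
```

With 2 system prompts, 3 models, and 2 vector store options, the sketch yields 12 configurations, which means 1,200 responses for 100 user prompts. This is why manual review does not scale and an automated evaluator is needed.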

This capability works by having an evaluator model, managed by Dataster, compare the output to the ground truth defined alongside the user prompt. The evaluator model assesses the similarity between the output and the ground truth, determining if they could be used interchangeably, and ultimately grades the output in a binary fashion as either good or bad.
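The grading step can be pictured with the following minimal Python sketch. It is not Dataster's evaluator: the real evaluator is a model managed by Dataster, whereas `naive_evaluator` below is a deliberately simple stand-in that only compares normalized text. The names `EvalCase`, `naive_evaluator`, and `grade` are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """A user prompt paired with its ground truth (hypothetical structure)."""
    user_prompt: str
    ground_truth: str


def _normalize(text: str) -> str:
    # Lowercase, collapse whitespace, and drop a trailing period.
    return " ".join(text.lower().split()).rstrip(".")


def naive_evaluator(output: str, ground_truth: str) -> bool:
    """Stand-in for the evaluator model: True if the texts match after normalization.

    A real evaluator model would judge whether the two answers are
    interchangeable in meaning, not just textually equal.
    """
    return _normalize(output) == _normalize(ground_truth)


def grade(output: str, case: EvalCase,
          evaluator: Callable[[str, str], bool] = naive_evaluator) -> int:
    """Binary grade: 1 (good) if the evaluator accepts the output, else 0 (bad)."""
    return 1 if evaluator(output, case.ground_truth) else 0


case = EvalCase(user_prompt="What is the capital of France?", ground_truth="paris")
good = grade("Paris.", case)
bad = grade("Lyon", case)
```

Passing the evaluator as a parameter keeps the binary grading logic separate from the judgment itself, so the stand-in could be swapped for a call to an evaluator model without changing `grade`.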

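Once all the results are received, Dataster compiles statistics for each model, each RAG, and each system prompt, and presents the average score for each. Because every output is graded as either good or bad, the average reflects the share of outputs graded good.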
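The aggregation can be sketched in a few lines of Python. This is an illustrative assumption about the shape of the data, not Dataster's implementation: each result is a hypothetical dictionary holding the model, RAG, and system prompt that produced an output, plus a binary `score` where 1 means good and 0 means bad.

```python
from collections import defaultdict

# Hypothetical graded results; field names and values are illustrative only.
results = [
    {"model": "model-a", "rag": "docs-index", "system_prompt": "concise", "score": 1},
    {"model": "model-a", "rag": "none", "system_prompt": "detailed", "score": 0},
    {"model": "model-b", "rag": "docs-index", "system_prompt": "concise", "score": 1},
    {"model": "model-b", "rag": "none", "system_prompt": "detailed", "score": 1},
]


def average_scores(results, dimension):
    """Average the binary scores grouped by one dimension (e.g. "model")."""
    totals = defaultdict(lambda: [0, 0])  # key -> [sum of scores, count]
    for result in results:
        bucket = totals[result[dimension]]
        bucket[0] += result["score"]
        bucket[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


by_model = average_scores(results, "model")
by_rag = average_scores(results, "rag")
by_system_prompt = average_scores(results, "system_prompt")
```

In this sample, `model-a` averages 0.5 and `model-b` averages 1.0, so half of `model-a`'s outputs were graded good. Grouping the same results by a different dimension shows whether a weak score comes from the model, the RAG, or the system prompt.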

Conclusion

Dataster's automated evaluation framework lets builders assess the quality of their GenAI applications' outputs at scale. By testing many combinations of system prompts, LLMs, and RAG, builders can identify the setup that delivers the most accurate and reliable answers for their use case. Automating this evaluation makes testing faster and more efficient, which helps the application maintain a high standard of answer quality.

If you encounter any issues or need further assistance, please contact our support team at support@dataster.com.