Dataster Documentation

Dataster helps you build Generative AI applications with better accuracy and lower latency.

Latency Tests Overview

GenAI applications fall into several categories, including real-time, best-effort, and batch-oriented. Real-time applications have been the most prevalent: organizations of all sizes have been building assistants, copilots, and question-and-answer chatbots. For these applications, it is crucial to return output to the user promptly, and latency can significantly affect whether a real-time GenAI application succeeds or fails.

To assess the expected latency of their application, builders often rely on public benchmarks for a given set of models. However, the value of these latency benchmarks is limited for two reasons. First, the input prompts used in the benchmarks differ significantly from the actual prompts used for a specific enterprise use case. Second, if the builder adds Retrieval-Augmented Generation (RAG) backed by their own vector store, the benchmarks become even less relevant, because retrieval adds its own latency.

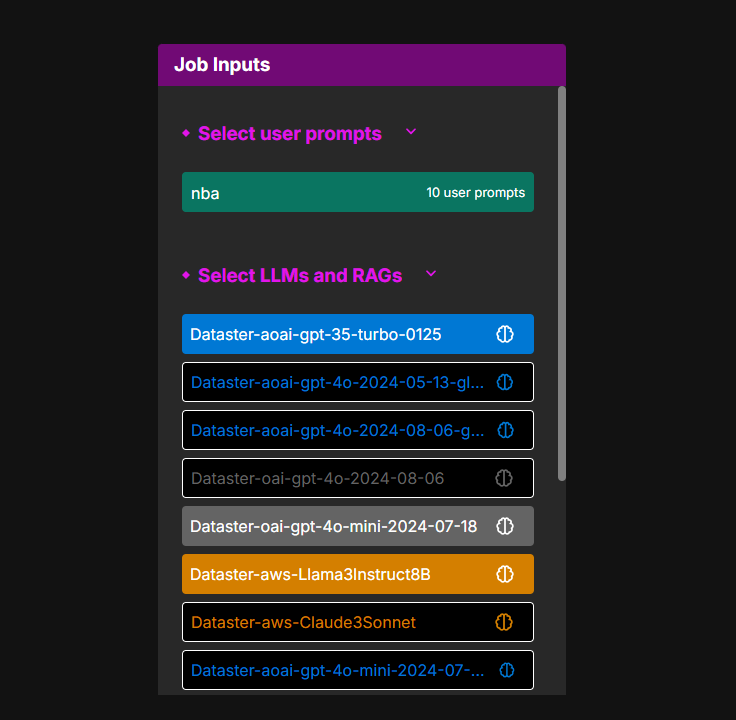

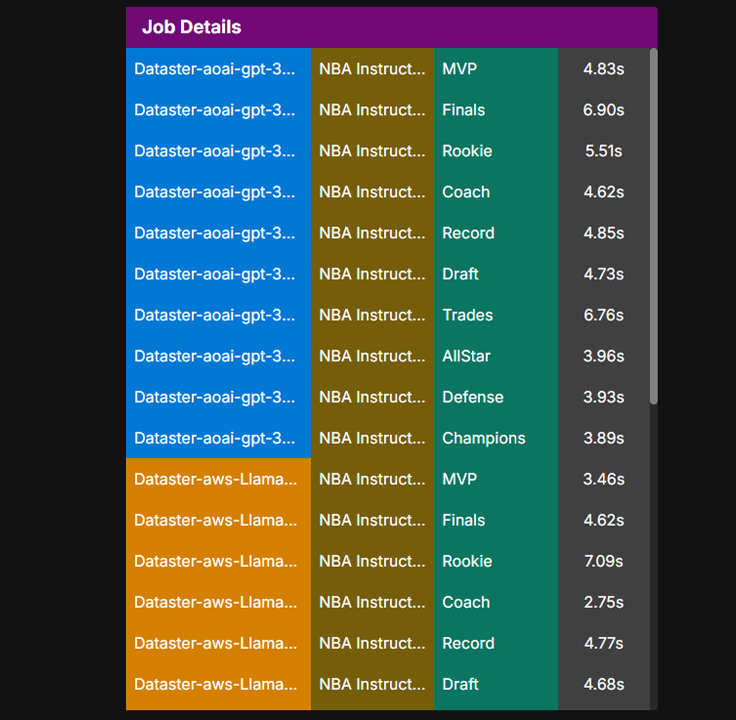

To tackle this problem, Dataster provides a robust latency test framework that allows builders to test their use case at scale by sending hundreds of user prompts to various combinations of system prompts, Large Language Models (LLMs), and optionally vector stores for RAG. This way, the builder can create as many RAG combinations as necessary to determine which one will deliver the best latency for their use case and their users.
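As a rough illustration of how such combinations multiply, the sketch below enumerates every pairing of system prompt, model, and optional vector store. It is not Dataster's API: the function name `build_combinations`, the dictionary keys, and the use of `None` to mean "no RAG, LLM only" are all assumptions made for this example.

```python
from itertools import product


def build_combinations(system_prompts, models, vector_stores=()):
    """Enumerate every (system prompt, model, vector store) combination.

    Hypothetical helper: a vector store of None stands for a plain LLM call
    without retrieval, which is an assumption of this sketch.
    """
    stores = [None, *vector_stores]
    return [
        {"system_prompt": sp, "model": m, "vector_store": vs}
        for sp, m, vs in product(system_prompts, models, stores)
    ]


if __name__ == "__main__":
    combos = build_combinations(
        system_prompts=["concise", "detailed"],  # placeholder prompt labels
        models=["model-a", "model-b"],           # placeholder model names
        vector_stores=["store-1"],               # placeholder store name
    )
    print(f"{len(combos)} combinations to test")
```

Even a small selection grows quickly, which is why testing every combination by hand is impractical.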

This capability works by sending requests to the selected RAGs and LLMs in parallel and individually measuring the time it takes to receive the full output.
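The idea of parallel dispatch with individual timing can be sketched as follows. This is a minimal illustration, not Dataster's implementation: `fake_model_call` is a hypothetical stand-in that merely sleeps, and the target names and delays are invented for the example.

```python
import asyncio
import time


async def fake_model_call(name: str, prompt: str, delay_s: float) -> str:
    """Hypothetical stand-in for a real LLM or RAG request; it only sleeps."""
    await asyncio.sleep(delay_s)
    return f"{name} answered: {prompt}"


async def timed_call(name: str, prompt: str, delay_s: float):
    """Measure the wall-clock time until the full output is received."""
    start = time.perf_counter()
    output = await fake_model_call(name, prompt, delay_s)
    elapsed = time.perf_counter() - start
    return name, elapsed, output


async def run_latency_test(targets: dict, prompt: str) -> dict:
    """Send the prompt to all targets concurrently; time each one separately."""
    tasks = [timed_call(name, prompt, delay) for name, delay in targets.items()]
    results = await asyncio.gather(*tasks)
    return {name: elapsed for name, elapsed, _ in results}


if __name__ == "__main__":
    latencies = asyncio.run(
        run_latency_test({"model-a": 0.05, "model-b": 0.10}, "Hello")
    )
    for name, seconds in latencies.items():
        print(f"{name}: {seconds * 1000:.1f} ms")
```

Because each request carries its own timer, a slow target does not inflate the measurement of a fast one, even though all requests are in flight at the same time.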

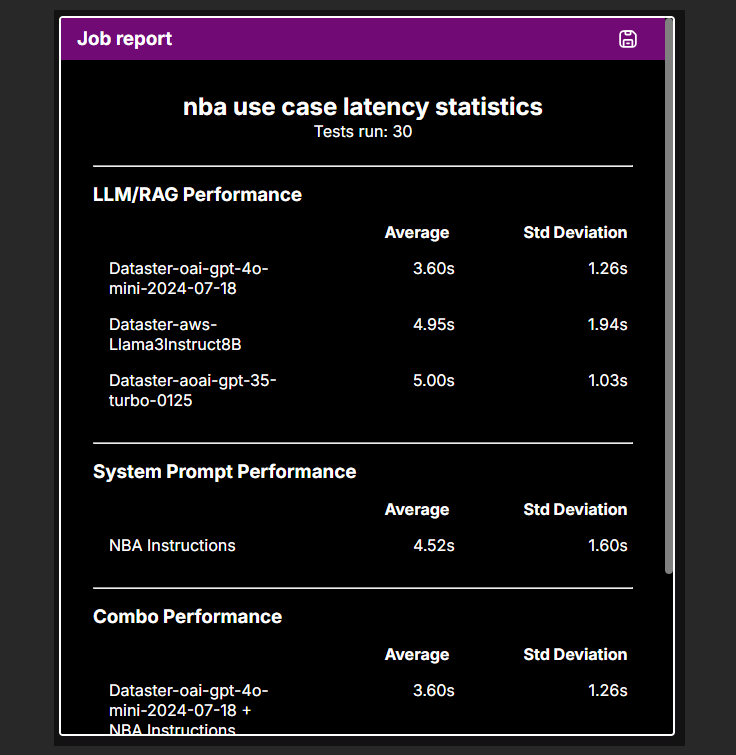

When all the results are received, Dataster compiles statistics for each model, each RAG, and each system prompt, presenting the average latency and standard deviation.
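The aggregation step can be sketched with Python's standard library. The grouping key, the `summarize` function, and the choice of the sample standard deviation (rather than the population one) are assumptions of this example; the documentation does not state which variant Dataster reports.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize(samples):
    """Group (key, latency_seconds) pairs and compute per-key statistics.

    The key can be a model name, a RAG name, or a system prompt label.
    Uses the sample standard deviation; a single sample yields 0.0.
    """
    grouped = defaultdict(list)
    for key, latency in samples:
        grouped[key].append(latency)
    return {
        key: {
            "count": len(values),
            "mean": mean(values),
            "stdev": stdev(values) if len(values) > 1 else 0.0,
        }
        for key, values in grouped.items()
    }


if __name__ == "__main__":
    # Invented measurements, in seconds, for illustration only.
    measurements = [("model-a", 1.2), ("model-a", 1.4), ("model-b", 2.1)]
    for key, stats in summarize(measurements).items():
        print(key, stats)
```

Reporting the standard deviation next to the mean matters for real-time use cases: two configurations with the same average can feel very different to users if one has much more variable response times.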

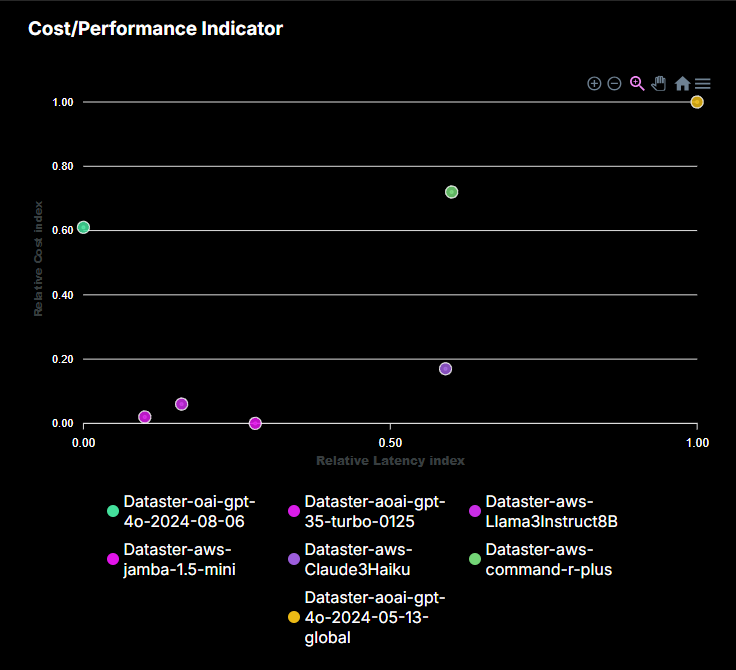

The Latency Cost/Performance Indicator graph visualizes the trade-off between latency and cost for the models and RAG configurations tested. Each point on the graph represents a model or RAG, plotted according to its relative latency index (x-axis) and relative cost index (y-axis). The relative latency index is derived from the mean latency of each model, while the relative cost index is calculated from the token usage of the specific use case and the public pricing of each model. This visualization allows builders to assess whether a more expensive model improves latency enough to justify the additional cost.
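One plausible way to compute such indices is sketched below. The exact formulas Dataster uses are not given here, so this example assumes that each index is the candidate's value divided by the smallest value among all candidates (so the best candidate scores 1.0), and that cost is token usage multiplied by a per-million-token price. The `Candidate` class, its field names, and all prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    mean_latency_s: float
    input_tokens: int
    output_tokens: int
    input_price_per_mtok: float   # hypothetical price per 1M input tokens
    output_price_per_mtok: float  # hypothetical price per 1M output tokens

    @property
    def cost(self) -> float:
        """Cost of the measured token usage at the given prices."""
        return (
            self.input_tokens * self.input_price_per_mtok
            + self.output_tokens * self.output_price_per_mtok
        ) / 1_000_000


def relative_indices(candidates):
    """Return {name: (latency_index, cost_index)}, normalized to the minimum.

    Assumed normalization: the fastest candidate has latency index 1.0 and
    the cheapest has cost index 1.0. Requires non-zero latencies and costs.
    """
    min_latency = min(c.mean_latency_s for c in candidates)
    min_cost = min(c.cost for c in candidates)
    return {
        c.name: (c.mean_latency_s / min_latency, c.cost / min_cost)
        for c in candidates
    }


if __name__ == "__main__":
    tested = [
        Candidate("fast-model", 1.0, 1000, 500, 10.0, 30.0),
        Candidate("cheap-model", 2.0, 1000, 500, 1.0, 3.0),
    ]
    for name, (x, y) in relative_indices(tested).items():
        print(f"{name}: latency index {x:.2f}, cost index {y:.2f}")
```

Under this normalization, a point far to the right is slow relative to the fastest option, and a point high up is expensive relative to the cheapest one, so candidates near the lower-left corner offer the best trade-off.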

Conclusion

Dataster's latency test framework empowers builders to accurately assess the performance of their GenAI applications in real-world scenarios. By allowing extensive testing across various RAG combinations and LLMs, Dataster ensures that developers can identify the optimal setup for their specific use case. The addition of the Latency Cost/Performance Indicator further enhances this capability by providing insights into the cost-efficiency of different models and RAGs. This comprehensive approach helps deliver timely and reliable outputs, ultimately enhancing the user experience and the overall success of real-time GenAI applications.

If you encounter any issues or need further assistance, please contact our support team at support@dataster.com.