Dataster Documentation

Dataster helps you build Generative AI applications with better accuracy and lower latency.

Run an Automated Evaluation Test

Dataster provides an automated evaluation framework for assessing the quality of a GenAI application's outputs across an entire use case. The framework can handle hundreds of prompts, sending each one to multiple Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) systems.

Prerequisites

- A Dataster account.

- One or more user prompts grouped in a use case.

- To be included in an automated evaluation, the prompts must have a ground truth.

- One or more system prompts that belong to the same use case as the user prompts.

- One or more LLMs. Dataster provides off-the-shelf LLMs that can be used for performance testing.

- Optionally, one or more RAGs.
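
Only user prompts that have a ground truth take part in an automated evaluation. The following minimal Python sketch models that rule. `UserPrompt` and `eligible_prompts` are hypothetical names used for illustration, not part of any Dataster API. Treating a whitespace-only ground truth as missing is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserPrompt:
    # Hypothetical record for illustration; not a Dataster type.
    text: str
    ground_truth: Optional[str] = None


def eligible_prompts(prompts: list[UserPrompt]) -> list[UserPrompt]:
    """Keep only prompts with a non-empty ground truth (assumed eligibility rule)."""
    return [p for p in prompts if p.ground_truth and p.ground_truth.strip()]


prompts = [
    UserPrompt("What is the capital of France?", "Paris"),
    UserPrompt("Summarize our refund policy."),  # no ground truth, so excluded
    UserPrompt("What is 2 + 2?", "4"),
]
print([p.text for p in eligible_prompts(prompts)])
# ['What is the capital of France?', 'What is 2 + 2?']
```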

Step 1: Navigate to the Automated Evaluation Page

- Navigate to the Automated Evaluation page by clicking "Auto Evaluation" in the left navigation pane.

Step 2: Select User Prompts

- Select the use case to test.

- The interface indicates how many user prompts have been created for this use case.

- One use case must be selected.

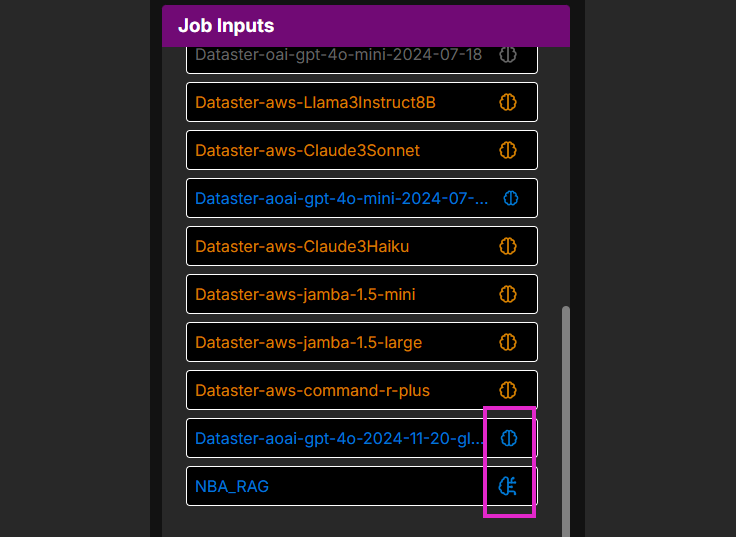

Step 3: Select LLMs and RAGs

- Select the LLMs to use for testing.

- Select the RAGs to use for testing.

- At least one RAG or one LLM must be selected.

- LLMs and RAGs are indicated by different icons.

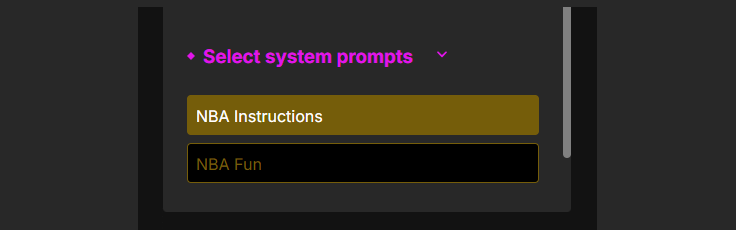

Step 4: Select System Prompts

- Select one or more system prompts for the use case.

- At least one system prompt must be selected.

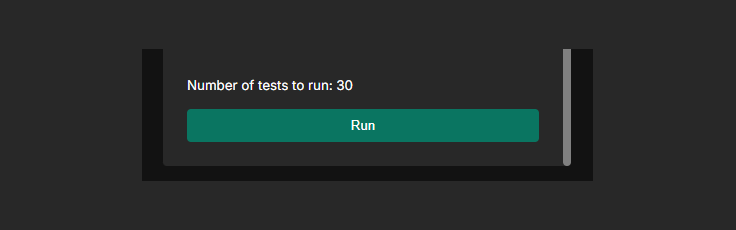
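
Steps 2 to 4 each impose a selection rule: a use case must be selected, at least one LLM or RAG must be selected, and at least one system prompt must be selected. The following Python sketch checks those rules before a job is started. The `validate_selection` function and its parameters are hypothetical illustrations of the documented rules, not Dataster's implementation.

```python
from typing import Optional


def validate_selection(
    use_case: Optional[str],
    llms: list[str],
    rags: list[str],
    system_prompts: list[str],
) -> list[str]:
    """Return the violated selection rules; an empty list means the job can run."""
    errors = []
    if not use_case:
        errors.append("Select a use case.")
    # LLMs and RAGs are interchangeable here: either one satisfies the rule.
    if not llms and not rags:
        errors.append("Select at least one LLM or RAG.")
    if not system_prompts:
        errors.append("Select at least one system prompt.")
    return errors


print(validate_selection("customer-support", ["llm-a"], [], ["concise"]))  # []
print(validate_selection(None, [], [], []))
```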

Step 5: Run the Automated Evaluation Job

- The user interface indicates how many tests will be run.

- Click Run.

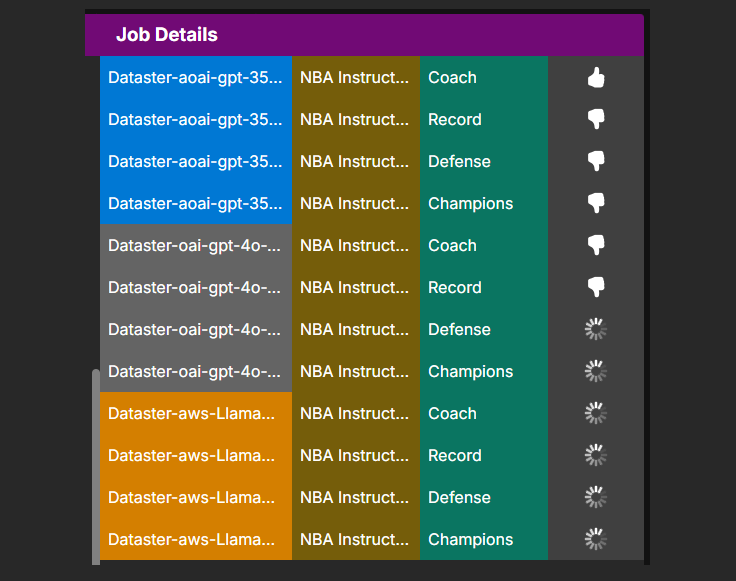
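
The number of tests shown before you click Run depends on your selections. This Python sketch assumes that each eligible user prompt is sent to every selected LLM and RAG once per selected system prompt. Under that assumption, which is not confirmed by this guide, the count is the product of the three selection sizes. The `plan_tests` helper and the sample names are hypothetical.

```python
from itertools import product


def plan_tests(user_prompts, targets, system_prompts):
    """Expand selections into one test per (user prompt, LLM or RAG, system prompt)."""
    return list(product(user_prompts, targets, system_prompts))


user_prompts = ["q1", "q2", "q3"]
targets = ["llm-a", "llm-b", "rag-x"]  # selected LLMs and RAGs, listed together
system_prompts = ["concise", "detailed"]

tests = plan_tests(user_prompts, targets, system_prompts)
print(len(tests))  # 18, that is 3 user prompts * 3 targets * 2 system prompts
```

If Dataster pairs LLMs and RAGs differently, the count would change accordingly. Rely on the number displayed in the interface.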

Step 6: Monitor the Automated Evaluation Job

- The user interface displays each test as it runs.

- When a test completes, its output quality evaluation is displayed as a thumbs up or a thumbs down.

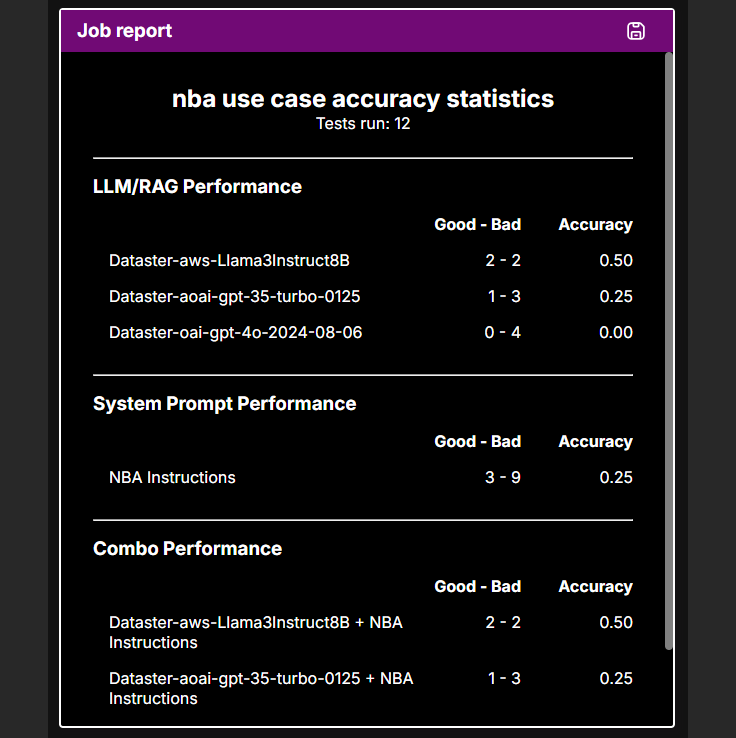

Step 7: Observe the Results

- After all the tests are complete, the consolidated results are displayed.

- For each model and RAG, the average score is displayed.

- For each system prompt, the average score is displayed.

- For each combination of model, RAG, and system prompt, the average score is displayed.

- Optionally, save the job results.
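
The following Python sketch illustrates the three kinds of averages listed above. It makes two assumptions that this guide does not confirm: each test result is recorded as a (target, system prompt, thumbs up or down) triple, where a target is an LLM or a RAG, and a score is the fraction of thumbs-up results. The `results` data and the `average_by` helper are hypothetical, and Dataster's actual scoring may differ.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical test results: (target, system_prompt, thumbs_up).
results = [
    ("llm-a", "concise", True),
    ("llm-a", "concise", False),
    ("llm-a", "detailed", True),
    ("rag-x", "concise", True),
    ("rag-x", "detailed", False),
    ("rag-x", "detailed", False),
]


def average_by(results, key):
    """Group results by key(target, system_prompt) and average thumbs up as 1.0, down as 0.0."""
    groups = defaultdict(list)
    for target, system_prompt, thumbs_up in results:
        groups[key(target, system_prompt)].append(1.0 if thumbs_up else 0.0)
    return {k: mean(v) for k, v in groups.items()}


by_target = average_by(results, lambda t, s: t)
by_system_prompt = average_by(results, lambda t, s: s)
by_combination = average_by(results, lambda t, s: (t, s))

print({k: round(v, 2) for k, v in by_target.items()})  # {'llm-a': 0.67, 'rag-x': 0.33}
print(by_combination)
```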

Conclusion

You have successfully run an automated evaluation test in Dataster. This allows you to measure the performance of your use case and make informed decisions to optimize output quality.

If you encounter any issues or need further assistance, please contact our support team at support@dataster.com.