Solutions for GenAIOps, LLMOps and Evaluation

Dataster helps you build Generative AI applications with better accuracy and lower latency.

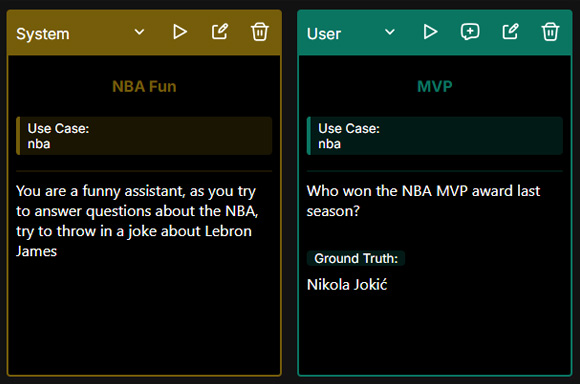

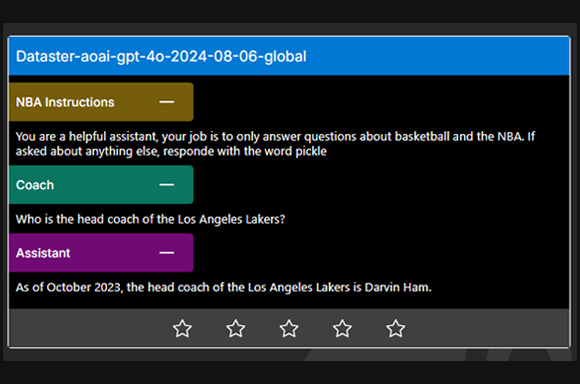

Effortless prompt management

Prompt management in GenAI applications involves designing, refining, and organizing prompts to guide AI models effectively. It includes crafting precise instructions, iterating and versioning prompts, evaluating and optimizing their performance, integrating with tools for easier management, and ensuring ethical considerations are addressed. This process is essential for producing high-quality, contextually appropriate AI outputs.

Dataster offers a versatile prompt catalog that supports system, user, and assistant prompts. Organize your prompts by use case and evaluate them either individually or collectively. With a single click, test them in the LLM playground or use a prompt set to measure the average latency of models on your specific use case.

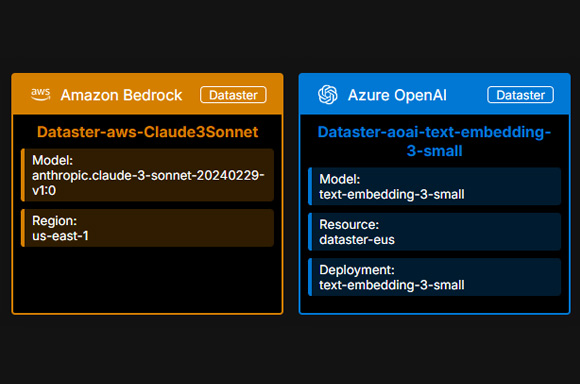

Multicloud LLM management

LLM (Large Language Model) management involves overseeing the lifecycle of these models to ensure they perform optimally in AI applications. This includes data collection, model training or fine-tuning, testing and validation, integration, deployment, optimization, and monitoring. Effective LLM management ensures models are robust, scalable, and capable of meeting real-world demands.

Seamlessly integrate and oversee models across multiple cloud providers in one place with Dataster's LLM catalog, ensuring flexibility and efficiency. This capability allows for streamlined operations and cost management, empowering you to leverage the best resources for your AI applications.

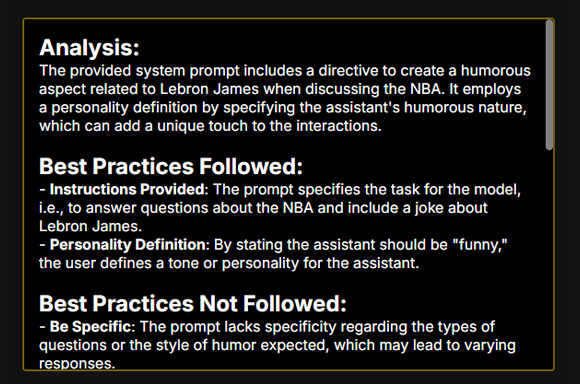

Iterative prompt engineering

Iterative prompt engineering involves continuously refining and improving prompts to enhance AI model performance. This process includes formulating an initial prompt, analyzing the AI's output, identifying areas for improvement, and refining the prompt accordingly. By repeating these steps, practitioners can fine-tune prompts to produce more accurate, relevant, and contextually appropriate outputs.

Dataster offers insightful recommendations for your system prompts, helping you identify and implement prompt engineering best practices that might have been overlooked. Additionally, Dataster provides a grading system with each iteration, ensuring that every step you take moves your application in the right direction.

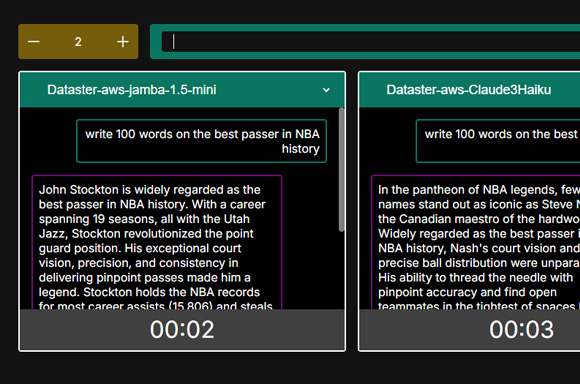

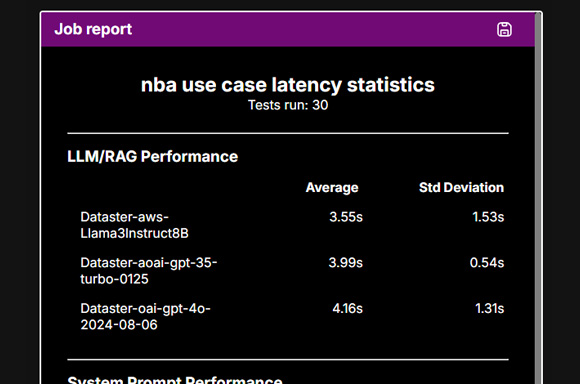

RAG and LLM latency comparison

Comparing the latency of multiple LLMs involves measuring and analyzing the time each model takes to generate responses. By systematically comparing these latencies, developers can identify which models offer the fastest response times, ensuring optimal performance for real-time applications. This comparison helps in selecting the most efficient model tailored to specific use cases, balancing speed with other factors like quality and cost.

Put your language models and RAGs to the test on Dataster's racetrack. Compare up to 8 models and RAGs, evaluating their latency with your prompts. Dataster lets you see which model delivers the lowest latency, helping you choose the fastest option for your use case.
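To make the idea concrete, here is a minimal sketch of a latency comparison in Python. It is not Dataster's implementation or API: the `fast_model` and `slow_model` functions are hypothetical stand-ins for real model calls, and the helper names are illustrative only. It times each model on the same prompts and reports the one with the lowest average latency.

```python
import time
from typing import Callable, Dict, List

# A "model" here is any callable that takes a prompt and returns text.
# Assumption: real code would wrap a provider SDK or HTTP call instead.
Model = Callable[[str], str]


def measure_latency(model: Model, prompt: str) -> float:
    """Return the wall-clock seconds one call to `model` takes."""
    start = time.perf_counter()
    model(prompt)
    return time.perf_counter() - start


def compare_latency(models: Dict[str, Model], prompts: List[str]) -> Dict[str, float]:
    """Return the average latency in seconds per model over all prompts."""
    if not prompts:
        raise ValueError("at least one prompt is required")
    return {
        name: sum(measure_latency(model, p) for p in prompts) / len(prompts)
        for name, model in models.items()
    }


def fastest(averages: Dict[str, float]) -> str:
    """Return the name of the model with the lowest average latency."""
    return min(averages, key=averages.get)


# Hypothetical stand-ins that simulate response time with sleep().
def fast_model(prompt: str) -> str:
    time.sleep(0.01)
    return "ok"


def slow_model(prompt: str) -> str:
    time.sleep(0.05)
    return "ok"


averages = compare_latency(
    {"fast": fast_model, "slow": slow_model},
    ["Summarize this ticket.", "Translate this sentence."],
)
for name, seconds in sorted(averages.items(), key=lambda item: item[1]):
    print(f"{name}: {seconds * 1000:.1f} ms")
print("lowest latency:", fastest(averages))
```

In practice, network variance means a single pass is rarely enough; repeating each prompt several times gives a steadier average.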

RAG and LLM benchmark for latency

Benchmarking the latency of RAG (Retrieval-Augmented Generation) applications and LLMs (Large Language Models) involves assessing and comparing their response times. This process includes measuring key metrics such as the average response time and the standard deviation for both types of systems. By conducting these benchmarks, developers can determine which setups deliver the quickest responses, ensuring efficient performance for real-time applications.

Systematically test your entire use case against various combinations of LLMs, RAGs, and system prompts with Dataster. Run latency benchmark jobs to discover the optimal combination that delivers the lowest latency on your specific use case, ensuring the best experience for your users.
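As an illustration of what such a benchmark computes, the sketch below runs every combination of model and system prompt against a set of user prompts, then reports the average response time and standard deviation for each combination. It is a hedged example rather than Dataster's actual job format: the `LatencyStats` record, the `benchmark` function, and the two simulated models are all hypothetical.

```python
import itertools
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

# Assumption: a model takes (system_prompt, user_prompt) and returns text.
Model = Callable[[str, str], str]


@dataclass
class LatencyStats:
    model: str
    system_prompt: str
    mean_s: float   # average response time in seconds
    stdev_s: float  # sample standard deviation in seconds


def benchmark(
    models: Dict[str, Model],
    system_prompts: Sequence[str],
    user_prompts: Sequence[str],
    runs: int = 1,
) -> List[LatencyStats]:
    """Time every (model, system prompt) combination, fastest first."""
    if not user_prompts or runs < 1:
        raise ValueError("need at least one user prompt and one run")
    results = []
    for (name, call), system_prompt in itertools.product(models.items(), system_prompts):
        samples = []
        for user_prompt in user_prompts:
            for _ in range(runs):
                start = time.perf_counter()
                call(system_prompt, user_prompt)
                samples.append(time.perf_counter() - start)
        # stdev() needs at least two samples; report 0.0 otherwise.
        spread = statistics.stdev(samples) if len(samples) > 1 else 0.0
        results.append(LatencyStats(name, system_prompt, statistics.mean(samples), spread))
    return sorted(results, key=lambda r: r.mean_s)


# Hypothetical stand-ins that simulate response time with sleep().
def fast_model(system_prompt: str, user_prompt: str) -> str:
    time.sleep(0.01)
    return "ok"


def slow_model(system_prompt: str, user_prompt: str) -> str:
    time.sleep(0.03)
    return "ok"


results = benchmark(
    {"slow": slow_model, "fast": fast_model},
    system_prompts=["You are concise.", "You are thorough."],
    user_prompts=["Question 1", "Question 2"],
    runs=2,
)
for r in results:
    print(f"{r.model} | {r.system_prompt} | mean {r.mean_s * 1000:.1f} ms | stdev {r.stdev_s * 1000:.1f} ms")
```

Reporting the standard deviation alongside the mean matters because two setups with the same average can feel very different to users if one of them has occasional slow responses.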

Manual or automated RAG and LLM benchmark for accuracy

Benchmarking the accuracy of RAG applications and LLMs involves assessing the quality of their outputs. By conducting these benchmarks, developers can identify which systems produce the highest-quality outputs and confirm that they meet the standards required for a given application. This process helps in selecting the most accurate models and configurations, balancing quality with other considerations such as speed and resource efficiency.

Conduct manual and automated evaluation jobs using various LLMs, RAGs, and system prompts. Assess the accuracy of responses and develop higher-performing systems tailored to your use cases.
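A rough sketch of an automated accuracy evaluation might look like the following. It does not reflect how Dataster grades responses: the `normalized_match` grader is a deliberately simple placeholder that compares an answer to a reference, and the two systems are hypothetical. A human rating or a model-based judge could be plugged in through the same `grader` parameter.

```python
from typing import Callable, Dict, List, Tuple

# Assumption: a system takes a question and returns an answer string.
System = Callable[[str], str]
# A grader maps (answer, reference) to a score between 0.0 and 1.0.
Grader = Callable[[str, str], float]


def normalized_match(answer: str, reference: str) -> float:
    """Placeholder grader: 1.0 if the texts match ignoring case and outer spaces."""
    return 1.0 if answer.strip().lower() == reference.strip().lower() else 0.0


def evaluate(
    systems: Dict[str, System],
    cases: List[Tuple[str, str]],
    grader: Grader = normalized_match,
) -> Dict[str, float]:
    """Return the mean score per system over (question, reference) cases."""
    if not cases:
        raise ValueError("at least one test case is required")
    scores = {}
    for name, system in systems.items():
        total = sum(grader(system(question), reference) for question, reference in cases)
        scores[name] = total / len(cases)
    return scores


cases = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
]

# Hypothetical stand-ins for real LLM or RAG pipelines.
canned_answers = {"What is 2 + 2?": " 4 ", "What is the capital of France?": "paris"}


def good_system(question: str) -> str:
    return canned_answers[question]


def weak_system(question: str) -> str:
    return "4"  # answers every question the same way


scores = evaluate({"good": good_system, "weak": weak_system}, cases)
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.0%}")
```

Exact matching only suits short factual answers; free-form responses usually need a more forgiving grader or a manual review pass.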